Machine Learning

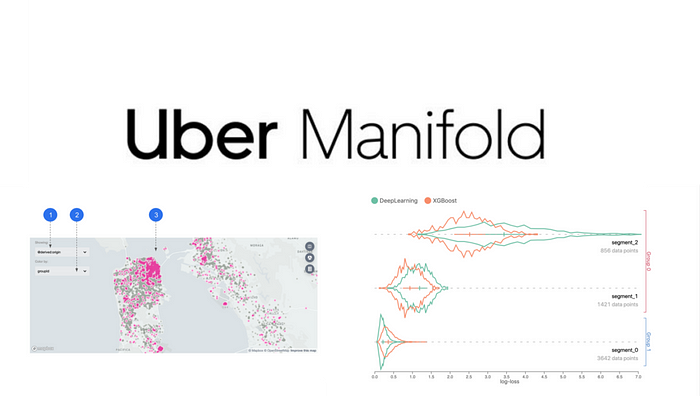

Inside Manifold: Uber’s Stack for Debugging Machine Learning Models

The open-source stack combines clever visualizations to surface insights about ML models.

Uber continues its amazing contributions to the machine learning open-source community. From probabilistic programming languages like Pyro to low-code machine learning tools like Ludwig, the transportation giant has been regularly releasing tools and frameworks that streamline the lifecycle of machine learning applications. Over a year ago, Uber announced that it was open-sourcing Manifold, a model-agnostic visual debugging tool for machine learning models. The goal of Manifold is to help data scientists identify performance issues across datasets and models in a visually intuitive way.

Machine learning programs differ from traditional software applications in that their structure is constantly changing and evolving as the model builds more knowledge. As a result, debugging and interpreting machine learning models is one of the most challenging aspects of real-world artificial intelligence (AI) solutions. Debugging, interpretation and diagnosis are active areas of focus for organizations building machine learning solutions at scale. The challenge is nothing new, and the industry has produced several tools and frameworks in this area. However, most of the existing stacks focus on evaluating a candidate model using performance metrics such as log loss, area under curve (AUC), and mean absolute error (MAE) which, although useful, offer little insight in terms of the underlying reasons of the…